New Website

09 Nov 2022

The team from Rayo Estudio designed and developed an amazing new website: https://celiacintas.io/. All new papers, talks, and general news will be posted there from now on.

This static site will no longer be updated.

Happy to announce the CFP for Workshops at ICLR 2023.

Workshops provide an informal, cutting-edge venue for discussing works in progress and future directions. Good workshops have helped to crystallize common problems, explicitly contrast competing frameworks, and clarify essential questions for a subfield or application area. Workshops are a structured means of bringing together people with common interests to form communities, so good workshops should include some form of community building.

Proposals should be submitted as an application through the CMT system.

Important dates for workshop submissions

The criteria and process by which proposals will be assessed are described in the Guidance for ICLR Workshop Proposals 2023.

We’re looking for short presentations (10 to 15 minutes) related to:

If you’re interested in presenting your work at the TrustAI Workshop, please submit your response here before the 1st of August 2022.

Happy to announce the CFP for the Practical Machine Learning for Developing Countries workshop at ICLR 2022. We encourage contributions that highlight the challenges of learning in the low-resource environments typical of developing countries.

Deadline: February 25th 12:00 AM UTC.

The Practical Machine Learning for Developing Countries (PML4DC) workshop is a full-day event that has been held at ICLR for the past two years in a row (past events include PML4DC 2020 and PML4DC 2021). PML4DC aims to foster collaborations and build a cross-domain community by featuring invited talks, panel discussions, contributed presentations (oral and poster), and round-table mixers.

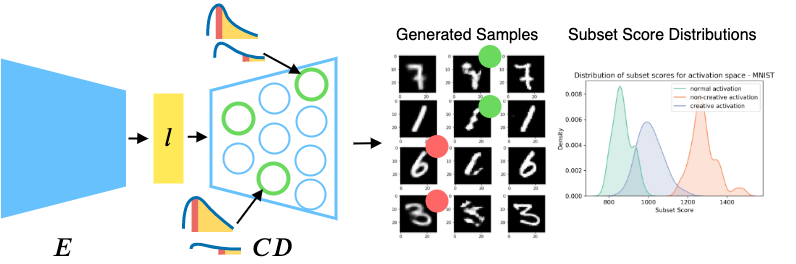

We’re going to present some preliminary results from our work “Towards creativity characterization of generative models via group-based subset scanning” at the Synthetic Data Generation Workshop at ICLR’21.

Creativity is a process that provides novel and meaningful ideas. Current deep learning approaches open a new direction that enables the study of creativity from a knowledge acquisition perspective. Novelty generation using powerful deep generative models, such as Variational Autoencoders (VAEs) and Generative Adversarial Networks (GANs), has been attempted. However, such models discourage out-of-distribution generation to avoid instability and decrease spurious sample generation, limiting their creative generation potential. We propose group-based subset scanning to quantify, detect, and characterize creative processes by detecting a subset of anomalous node activations in the hidden layers of generative models. Our experiments on original, typically decoded, and “creatively decoded” (Das et al., 2020) image datasets reveal that the distribution of the proposed subset scores is more useful for detecting creative processes in the activation space than in the pixel space. Further, we found that creative samples generate larger subsets of anomalies than normal or non-creative samples across datasets. Also, the node activations highlighted during the creative decoding process are different from those responsible for normal sample generation.
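The abstract describes group-based subset scanning only at a high level. As a rough illustration, here is a minimal sketch of how such a scan over hidden-layer activations might work. It rests on assumptions the post does not state: each activation is turned into a one-tailed empirical p-value against activations from normal (non-creative) samples, the subset is scored with the Berk-Jones nonparametric scan statistic, and the search alternates between choosing samples and choosing nodes. All function names are hypothetical, and this is not the paper's implementation.

```python
# Hypothetical sketch, not the authors' code. Assumes one-tailed empirical
# p-values and the Berk-Jones scan statistic.
import math
from typing import List, Sequence, Tuple


def empirical_pvalues(background: Sequence[Sequence[float]],
                      test: Sequence[Sequence[float]]) -> List[List[float]]:
    """One-tailed empirical p-value for every (sample, node) pair.

    Assumption: activations larger than the background are more anomalous.
    The +1 terms keep every p-value strictly above zero.
    """
    n = len(background)
    return [[(1 + sum(1 for row in background if row[j] >= x)) / (n + 1)
             for j, x in enumerate(sample)]
            for sample in test]


def berk_jones(alpha: float, n_alpha: int, n: int) -> float:
    """Berk-Jones score n * KL(n_alpha / n || alpha); zero unless the
    observed fraction of p-values <= alpha exceeds alpha."""
    obs = n_alpha / n
    if obs <= alpha:
        return 0.0
    if n_alpha == n:  # avoid 0 * log(0) in the second KL term
        return n * math.log(1.0 / alpha)
    return n * (obs * math.log(obs / alpha)
                + (1 - obs) * math.log((1 - obs) / (1 - alpha)))


def best_prefix(counts: Sequence[int], size: int,
                alpha: float) -> Tuple[float, List[int]]:
    """Sort records by how many of their p-values are <= alpha and keep the
    top-k prefix with the highest score (each record holds `size` p-values)."""
    order = sorted(range(len(counts)), key=lambda r: counts[r], reverse=True)
    best_score, best_k, n_alpha = -1.0, 1, 0
    for k, r in enumerate(order, start=1):
        n_alpha += counts[r]
        score = berk_jones(alpha, n_alpha, k * size)
        if score > best_score:
            best_score, best_k = score, k
    return best_score, order[:best_k]


def group_scan(pvals: Sequence[Sequence[float]], alpha_max: float = 0.5,
               max_iter: int = 20) -> Tuple[float, List[int], List[int]]:
    """Search for the (samples, nodes) subset with the highest score."""
    n_samples, n_nodes = len(pvals), len(pvals[0])
    alphas = sorted({p for row in pvals for p in row if p <= alpha_max})
    best = (0.0, [], [])
    for alpha in alphas:
        hits = [[1 if p <= alpha else 0 for p in row] for row in pvals]
        nodes = list(range(n_nodes))  # start from all nodes
        score, found = -1.0, ([], [])
        for _ in range(max_iter):
            # Choose samples given the current nodes ...
            counts = [sum(hits[i][c] for c in nodes) for i in range(n_samples)]
            _, samples = best_prefix(counts, len(nodes), alpha)
            # ... then nodes given those samples.
            counts = [sum(hits[i][c] for i in samples) for c in range(n_nodes)]
            new_score, nodes = best_prefix(counts, len(samples), alpha)
            if new_score <= score:
                break  # no strict improvement: this alpha has converged
            score, found = new_score, (sorted(samples), sorted(nodes))
        if score > best[0]:
            best = (score, found[0], found[1])
    return best


if __name__ == "__main__":
    # Toy data: 9 "normal" samples with 4 nodes; two test samples fire
    # strongly on nodes 0 and 1.
    background = [[float(r)] * 4 for r in range(9)]
    test = [[100.0, 100.0, 4.0, 4.0],
            [100.0, 100.0, 4.0, 4.0],
            [4.0, 4.0, 4.0, 4.0]]
    score, samples, nodes = group_scan(empirical_pvalues(background, test))
    print(samples, nodes)  # expected: [0, 1] [0, 1]
```

Each alternating step relies on the linear-time subset scanning property, which holds for this statistic once the threshold α is fixed: the best subset of samples (or nodes) is a prefix of the records sorted by their number of p-values at or below α. That means only one candidate per prefix length needs scoring, rather than every possible subset.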